
Real-World Deep Neural Networks

Deep learning is a set of algorithms based on multi-layer networks, including both supervised and unsupervised neural networks.

Supervised neural network architectures include:

- Multi-layer Perceptrons

- Convolutional Neural Networks (LeCun)

- Recurrent Neural Networks (Schmidhuber)

Unsupervised neural networks include:

- Deep Belief Nets / Stacked RBMs (Hinton)

- Stacked denoising autoencoders (Bengio)

- Sparse Autoencoders (LeCun, A. Ng)

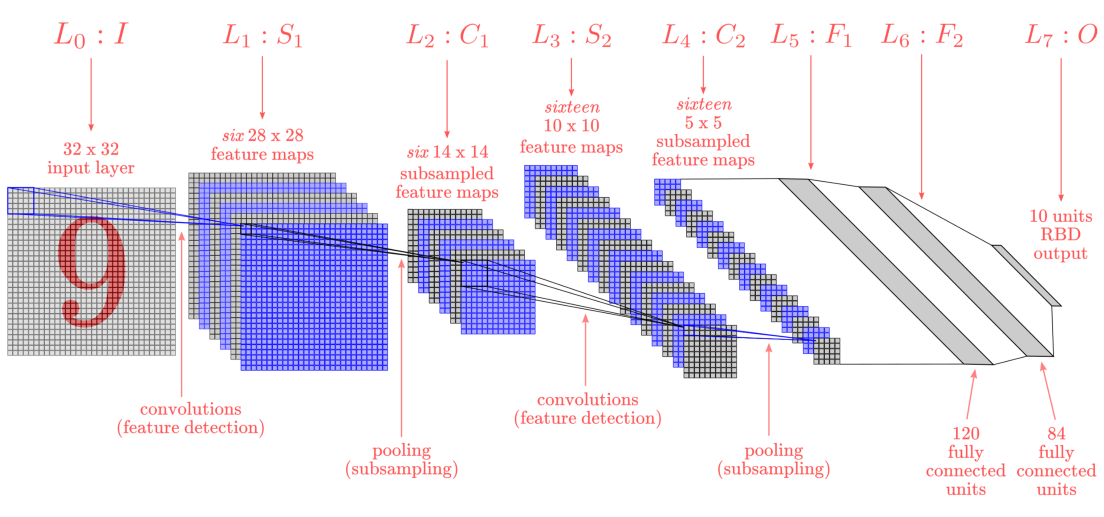

LeNet-5

LeNet-5 was one of the first published convolutional neural networks. Its benefit was first demonstrated by Yann LeCun, then a researcher at AT&T Bell Labs, for the purpose of recognizing handwritten digits in images.

In the 1990s, experiments by LeCun and his colleagues with LeNet gave the first compelling evidence that it was possible to train convolutional neural networks by backpropagation.

The model achieved outstanding results for its time and was adopted to recognize digits for processing deposits in ATMs.

Some ATMs still run the code that Yann and his colleague Leon Bottou wrote in the 1990s!

Figure: LeNet-5 architecture for digit recognition
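To make the LeNet-5 architecture concrete, the sketch below traces how the feature-map size shrinks through its convolution and subsampling layers. It is a minimal illustration, not the original implementation: the helper names `conv_out` and `trace_lenet5` are hypothetical, and the layer settings (32 x 32 input, 5 x 5 convolutions, 2 x 2 subsampling) are taken from the commonly cited description of the network.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a square convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1


# (name, kind, kernel, stride, output channels); assumed LeNet-5 settings
LENET5_LAYERS = [
    ("C1", "conv", 5, 1, 6),
    ("S2", "pool", 2, 2, 6),
    ("C3", "conv", 5, 1, 16),
    ("S4", "pool", 2, 2, 16),
    ("C5", "conv", 5, 1, 120),
]


def trace_lenet5(input_size=32):
    """Return [(layer name, channels, side length)] for the feature layers."""
    shapes = []
    size = input_size
    for name, _kind, kernel, stride, channels in LENET5_LAYERS:
        size = conv_out(size, kernel, stride)
        shapes.append((name, channels, size))
    return shapes


for name, channels, size in trace_lenet5():
    print(f"{name}: {channels} x {size} x {size}")
```

After C5 the feature map is 120 x 1 x 1, which is then fed to a fully connected layer of 84 units and a 10-way output, one unit per digit.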

AlexNet

AlexNet was introduced in 2012, named after Alex Krizhevsky, the first author of the breakthrough ImageNet classification paper (Krizhevsky et al., 2012).

AlexNet, which employed an 8-layer convolutional neural network, won the ImageNet Large Scale Visual Recognition Challenge 2012 by a phenomenally large margin.

This network proved, for the first time, that learned features can surpass manually designed features, breaking the previous paradigm in computer vision.

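The architectures of AlexNet and LeNet are very similar: both stack convolution and pooling layers and finish with fully connected layers, although AlexNet is deeper and much larger.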

Figure: LeNet (left) and AlexNet (right)
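The "8-layer" description of AlexNet can be checked with a short sketch that lists its layers and traces the feature-map size. This is an illustration under stated assumptions, not the authors' code: the names `out_size`, `trace_alexnet`, and `count_weight_layers` are hypothetical, the two-GPU split of the original model is ignored, and a 227 x 227 input is assumed because that is the size for which the first-layer arithmetic works out (the paper states 224 x 224).

```python
def out_size(size, kernel, stride=1, padding=0):
    """Side length after a square convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1


# (name, kind, kernel, stride, padding, output channels); assumed settings
ALEXNET_FEATURES = [
    ("conv1", "conv", 11, 4, 0, 96),
    ("pool1", "pool", 3, 2, 0, 96),
    ("conv2", "conv", 5, 1, 2, 256),
    ("pool2", "pool", 3, 2, 0, 256),
    ("conv3", "conv", 3, 1, 1, 384),
    ("conv4", "conv", 3, 1, 1, 384),
    ("conv5", "conv", 3, 1, 1, 256),
    ("pool5", "pool", 3, 2, 0, 256),
]
ALEXNET_FC = [4096, 4096, 1000]  # fully connected layer widths


def trace_alexnet(input_size=227):
    """Return [(layer name, channels, side length)] for the feature layers."""
    shapes = []
    size = input_size
    for name, _kind, kernel, stride, padding, channels in ALEXNET_FEATURES:
        size = out_size(size, kernel, stride, padding)
        shapes.append((name, channels, size))
    return shapes


def count_weight_layers():
    """Count layers with learnable weights: convolutions plus fully connected."""
    convs = sum(1 for layer in ALEXNET_FEATURES if layer[1] == "conv")
    return convs + len(ALEXNET_FC)


for name, channels, size in trace_alexnet():
    print(f"{name}: {channels} x {size} x {size}")
print("weight layers:", count_weight_layers())
```

The pattern matches LeNet (convolution and pooling followed by fully connected layers), but with five convolutional layers instead of LeNet's smaller stack, far more channels, and a 1000-way output for the ImageNet classes.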