Here’s a good explanation of exploratory data analysis:

“Exploratory Data Analysis refers to the critical process of performing initial investigations on data so as to discover patterns, to spot anomalies, to test hypotheses and to check assumptions with the help of summary statistics and graphical representations.”

Before making inferences from data it is essential to examine all your variables.

Listening to your data is important for many reasons, including:

- To catch mistakes

- To see patterns in the data

- To find violations of statistical assumptions

- To generate hypotheses

If you don’t listen to your data, you will have trouble later.

Types of Data

First of all, you need to understand the various types of data in your dataset. Understanding the types of data involved is key to analyzing them and processing them for further use.

Nominal

ID numbers, Names of people

Categorical

Eye Color, Zip Codes

Ordinal

Rankings (e.g., taste of potato chips on a scale from 1-10), grades, height in {tall, medium, short}

Interval

Calendar dates, temperatures in Celsius or Fahrenheit, GRE (Graduate Record Examination) and IQ scores

Ratio

Temperature in Kelvin, length, time, counts
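The measurement types above affect which operations make sense on a column. Here is a minimal sketch of how they might be represented in pandas; the column names and values are hypothetical and only mirror the examples above.

```python
import pandas as pd

# Hypothetical records mirroring the examples above.
df = pd.DataFrame({
    "eye_color": ["brown", "blue", "green", "brown"],      # nominal
    "height_class": ["short", "tall", "medium", "tall"],   # ordinal
    "temp_celsius": [21.5, 19.0, 23.1, 20.4],              # interval
    "length_cm": [10.2, 15.8, 12.0, 9.7],                  # ratio
})

# Nominal data: unordered categories.
df["eye_color"] = df["eye_color"].astype("category")

# Ordinal data: categories with an explicit order.
height_order = pd.CategoricalDtype(["short", "medium", "tall"], ordered=True)
df["height_class"] = df["height_class"].astype(height_order)

print(df.dtypes)
# Order comparisons are only meaningful for ordered categoricals.
at_least_medium = df["height_class"] >= "medium"
print(at_least_medium)
# Ratios only make sense for ratio data.
print(df["length_cm"].max() / df["length_cm"].min())
```

Declaring the order explicitly is a design choice: without it, pandas would treat "short", "medium", and "tall" as unrelated labels.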

These measurement types can be categorized as qualitative or quantitative: nominal, categorical, and ordinal data are qualitative, while interval and ratio data are quantitative.

Dimensionality of Data

The next important step is understanding the dimensionality of the dataset.
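As a quick sketch, the dimensionality of a tabular dataset can be read from the shape of a pandas DataFrame. The data below is randomly generated and purely hypothetical.

```python
import numpy as np
import pandas as pd

# Hypothetical dataset: 100 observations (rows) of 5 attributes (columns).
rng = np.random.default_rng(0)
df = pd.DataFrame(rng.normal(size=(100, 5)), columns=list("abcde"))

n_rows, n_cols = df.shape
print(f"{n_rows} observations, {n_cols} attributes")
df.info()  # column names, non-null counts, and dtypes
```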

What Are the Key Properties of a Dataset?

Prior to performing any type of statistical analysis, understanding the nature of the data being analyzed is essential.

You can use EDA to identify the properties of a dataset to determine the most appropriate statistical methods to apply to the data.

You can investigate several types of properties with EDA techniques, including the following:

- The center of the data

- The spread among the members of the data

- The skewness of the data

- The probability distribution the data follows

- The correlation among the elements in the dataset

- Whether or not the parameters of the data are constant over time

- The presence of outliers in the data

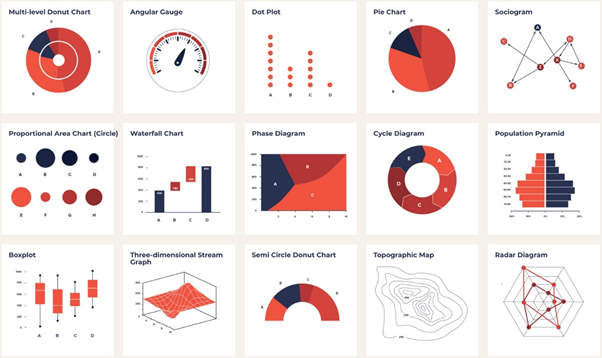
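Several of the properties listed above can be estimated with a few pandas calls. The following is a minimal sketch on a hypothetical, randomly generated sample; the 1.5 × IQR rule used for outliers is one common convention, not the only option.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(42)
# Hypothetical right-skewed sample with one extreme value appended.
values = pd.Series(np.append(rng.exponential(scale=2.0, size=200), 50.0))

# Center and spread.
center = {"mean": values.mean(), "median": values.median()}
spread = {"std": values.std(), "iqr": values.quantile(0.75) - values.quantile(0.25)}

# Skewness: a positive value means a longer right tail.
skewness = values.skew()

# Tukey's rule: flag points more than 1.5 * IQR beyond the quartiles.
q1, q3 = values.quantile([0.25, 0.75])
iqr = q3 - q1
outliers = values[(values < q1 - 1.5 * iqr) | (values > q3 + 1.5 * iqr)]

print(center, spread, skewness, len(outliers))
```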

Visualization Techniques for Exploratory Data Analysis

1). Correlation Matrix Plots

2). Density Plots

3). Scatter Plots

4). Bubble Plots

Correlation Matrix Plots

If you have a data set with many columns, a good way to quickly check correlations among columns is by visualizing the correlation matrix as a heatmap.

When using linear regression for modelling, it’s often necessary to remove highly correlated predictors, since they carry redundant information and make the fitted coefficients unstable. You can compute correlations with the pandas .corr() method and visualize the resulting correlation matrix as a heatmap in seaborn.

sns.heatmap(df.corr(), cmap='viridis', annot=False)

With the viridis colormap, lighter (yellow) shades represent higher, more positive correlations, while darker (purple) shades represent lower, more negative correlations.

If you set annot=True, the correlation coefficient for each pair of features is printed in its grid cell.

1). Here we can infer that “density” has a strong positive correlation with “residual sugar”, whereas it has a strong negative correlation with “alcohol”.

2). “free sulphur dioxide” and “citric acid” have almost no correlation with “quality”.

3). Since that correlation is close to zero, we can infer that there is no linear relationship between these two predictors and “quality”. It is therefore reasonably safe to drop these features if you’re applying a linear regression model to the dataset.
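Reading correlated pairs off a heatmap can also be done programmatically. The sketch below uses hypothetical generated columns (`x1`, `x2`, `x3`) and an assumed cutoff of 0.9; both are illustrative choices, not values from the dataset discussed above.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
n = 500
x1 = rng.normal(size=n)
df = pd.DataFrame({
    "x1": x1,
    "x2": 2.0 * x1 + rng.normal(scale=0.1, size=n),  # nearly a copy of x1
    "x3": rng.normal(size=n),                        # independent noise
})

corr = df.corr().abs()
# Keep only the upper triangle so each pair is considered once.
upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))

threshold = 0.9  # assumed cutoff; tune it for your data
to_drop = [col for col in upper.columns if (upper[col] > threshold).any()]

reduced = df.drop(columns=to_drop)
print(to_drop, list(reduced.columns))
```

Masking the lower triangle means only the later column of each correlated pair is dropped, so one representative of the pair always survives.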

Density Plots

Density plots are another way of getting a quick idea of the distribution of each attribute. The plots look like an abstracted histogram with a smooth curve drawn through the top of each bin, much like your eye tried to do with the histograms.

# Univariate Density Plots

import matplotlib.pyplot as plt

import pandas

url = "https://raw.githubusercontent.com/jbrownlee/Datasets/master/pima-indians-diabetes.csv"

names = ['preg', 'plas', 'pres', 'skin', 'test', 'mass', 'pedi', 'age', 'class']

data = pandas.read_csv(url, names=names)

data.plot(kind='density', subplots=True, layout=(3,3), sharex=False)

plt.show()

We can see that the distribution of each attribute is clearer than with the histograms.
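The smooth curve in a density plot is a kernel density estimate. To show the idea, here is a hand-rolled sketch with a Gaussian kernel in NumPy; the function name `gaussian_kde_1d`, the sample, and the fixed bandwidth of 0.4 are all assumptions made for illustration, and plotting libraries normally choose the bandwidth for you.

```python
import numpy as np

def gaussian_kde_1d(samples, grid, bandwidth):
    """Average of Gaussian bumps centered on each sample, evaluated on grid."""
    samples = np.asarray(samples, dtype=float)
    # Standardized distances from every grid point to every sample.
    z = (grid[:, None] - samples[None, :]) / bandwidth
    kernels = np.exp(-0.5 * z**2) / np.sqrt(2.0 * np.pi)
    return kernels.mean(axis=1) / bandwidth

rng = np.random.default_rng(1)
samples = rng.normal(loc=0.0, scale=1.0, size=300)  # hypothetical attribute
grid = np.linspace(-6.0, 6.0, 601)
density = gaussian_kde_1d(samples, grid, bandwidth=0.4)

# A density should integrate to approximately 1 over its support.
area = np.sum(density) * (grid[1] - grid[0])
print(round(area, 3))
```

A smaller bandwidth follows the samples more closely and looks bumpier; a larger one gives a smoother curve that can hide detail.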

Scatter Plots

Another common visualization technique is the scatter plot, a two-dimensional plot representing the joint variation of two data items. Each marker (a symbol such as a dot, square, or plus sign) represents an observation, and the marker’s position indicates the values for that observation. When you assign more than two measures, a scatter plot matrix is produced: a series of scatter plots displaying every possible pairing of the measures assigned to the visualization. Scatter plots are used for examining the relationship, or correlation, between X and Y variables.

Despite their simplicity, scatter plots are a powerful tool for visualizing data. A lot of options, flexibility, and representational power come with the simple change of a few parameters like color, size, shape, and regression plotting.

Regression Plotting

When we first plot our data on a scatter plot it already gives us a nice quick overview of our data. In the far left figure below, we can already see the groups where most of the data seems to bunch up and can quickly pick out the outliers.

But it’s also nice to be able to see how complicated our task might get; we can do that with regression plotting. In the middle figure below we’ve fitted a straight line. It’s pretty easy to see that a linear function won’t work, as many of the points are far away from the line. The far-right figure uses a polynomial of order 4 and looks much more promising, so it looks like we’ll need something of at least order 4 to model this dataset.

Code

import seaborn as sns

import matplotlib.pyplot as plt

df = sns.load_dataset('iris')

# A regular scatter plot

sns.regplot(x=df["sepal_length"], y=df["sepal_width"], fit_reg=False)

plt.show()

# A scatter plot with a linear regression fit:

sns.regplot(x=df["sepal_length"], y=df["sepal_width"], fit_reg=True)

plt.show()

# A scatter plot with a polynomial regression fit:

sns.regplot(x=df["sepal_length"], y=df["sepal_width"], fit_reg=True, order=4)

plt.show()
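To put a number on what the regression plots suggest visually, you can compare the fit error of different polynomial orders. This is a rough sketch on hypothetical generated data with a known nonlinear trend, not on the dataset plotted above; `rmse_of_fit` is a helper defined here for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical data with a clearly nonlinear trend plus noise.
x = np.linspace(-3.0, 3.0, 200)
y = 0.5 * x**4 - 2.0 * x**2 + rng.normal(scale=1.0, size=x.size)

def rmse_of_fit(order):
    """Fit a polynomial of the given order and return its RMSE on the data."""
    coeffs = np.polyfit(x, y, deg=order)
    residuals = y - np.polyval(coeffs, x)
    return float(np.sqrt(np.mean(residuals**2)))

for order in (1, 4):
    print(order, round(rmse_of_fit(order), 2))
```

Note that error measured on the same data used for fitting always favors higher orders, so for model selection you would want to check the error on held-out data as well.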

Marginal Histogram

Scatter plots with marginal histograms have histograms plotted on the top and side, representing the distribution of the points along the x- and y-axes. It’s a small addition, but great for seeing the exact distribution of our points and for identifying outliers more accurately.

For example, in the figure below we can see that the y-axis has a very heavy concentration of points around 3.0. Just how concentrated? That’s most easily seen in the histogram on the far right, which shows that there are at least three times as many points around 3.0 as there are in any other discrete range. We also see that there are barely any points above 3.75 in comparison to other ranges. For the x-axis, on the other hand, things are a bit more evened out, except for the outliers on the far right.

Code

import seaborn as sns

import matplotlib.pyplot as plt

df = sns.load_dataset('iris')

sns.jointplot(x=df["sepal_length"], y=df["sepal_width"], kind='scatter')

plt.show()
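The marginal histograms are ordinary one-dimensional histograms of each axis, so the counts behind them can be inspected directly. Here is a small sketch with NumPy on hypothetical generated values standing in for the two features; the bin count of 10 is an arbitrary choice.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical stand-ins for two measured features.
x = rng.normal(loc=5.8, scale=0.8, size=150)
y = rng.normal(loc=3.0, scale=0.4, size=150)

# Each marginal histogram is a 1D histogram of one axis on its own.
x_counts, x_edges = np.histogram(x, bins=10)
y_counts, y_edges = np.histogram(y, bins=10)

# The fullest bin on the y-axis and the range of values it covers.
busiest = int(np.argmax(y_counts))
print(y_edges[busiest], y_edges[busiest + 1], y_counts[busiest])
```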

Scatterplot Matrix

A scatterplot shows the relationship between two variables as dots in two dimensions, one axis for each attribute. You can create a scatterplot for each pair of attributes in your data. Drawing all these scatterplots together is called a scatterplot matrix.

Scatter plots are useful for spotting structured relationships between variables, like whether you could summarize the relationship between two variables with a line. Attributes with structured relationships may also be correlated and good candidates for removal from your dataset.

# Scatterplot Matrix

import matplotlib.pyplot as plt

import pandas

from pandas.plotting import scatter_matrix

url = "https://raw.githubusercontent.com/jbrownlee/Datasets/master/pima-indians-diabetes.csv"

names = ['preg', 'plas', 'pres', 'skin', 'test', 'mass', 'pedi', 'age', 'class']

data = pandas.read_csv(url, names=names)

scatter_matrix(data)

plt.show()

Like the Correlation Matrix Plot, the scatterplot matrix is symmetrical. This is useful for looking at the pair-wise relationships from different perspectives. Because there is little point in drawing a scatterplot of each variable with itself, the diagonal shows histograms of each attribute.

Bubble Plots

With bubble plots we are able to use several variables to encode information. The new one we will add here is size. In the figure below we are plotting the number of french fries eaten by each person vs their height and weight. Notice that a scatter plot is only a 2D visualization tool, but that using different attributes we can represent 3-dimensional information.

Here we are using color, position, and size. The position determines the person’s height and weight, the color determines the gender, and the size determines the number of french fries eaten! The bubble plot lets us conveniently combine all of the attributes into one plot so that we can see the high-dimensional information in a simple 2D view; nothing crazy complicated.
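A bubble plot like the one described can be sketched with matplotlib’s scatter function, where `s` sets marker size and `c` sets color. All of the data below is randomly generated and hypothetical; the scaling factor for marker size is an arbitrary choice.

```python
import matplotlib.pyplot as plt
import numpy as np

rng = np.random.default_rng(0)
n = 40
# Hypothetical data: none of these values come from a real survey.
height_cm = rng.normal(170, 10, size=n)
weight_kg = rng.normal(70, 12, size=n)
fries_eaten = rng.integers(5, 60, size=n)
gender = rng.integers(0, 2, size=n)  # 0 or 1, used only to pick a color

fig, ax = plt.subplots()
bubbles = ax.scatter(
    height_cm, weight_kg,        # position encodes height and weight
    s=fries_eaten * 5,           # marker area encodes fries eaten
    c=gender, cmap="coolwarm",   # color encodes gender
    alpha=0.6,                   # transparency keeps overlapping bubbles visible
)
ax.set_xlabel("Height (cm)")
ax.set_ylabel("Weight (kg)")
```

Call plt.show() afterwards to display the figure, as in the earlier examples.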

Word Clouds and Network Diagrams for Unstructured Data

The variety of big data brings challenges because semi-structured and unstructured data require new visualization techniques. A word cloud visual represents the frequency of a word within a body of text with its relative size in the cloud. This technique is used on unstructured data as a way to display high- or low-frequency words.
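The quantity behind a word cloud is a word-frequency count mapped to a font size. Here is a minimal sketch using only the standard library; the text, the tiny stop-word list, and the linear size scaling are assumptions for illustration, and it computes the sizes without drawing the cloud.

```python
import re
from collections import Counter

# Hypothetical snippet of unstructured text.
text = (
    "Data analysis starts with data. Explore the data, plot the data, "
    "and question the data before you model anything."
)

stop_words = {"the", "and", "with", "you", "before"}  # tiny assumed stop list
words = [w for w in re.findall(r"[a-z']+", text.lower()) if w not in stop_words]
frequencies = Counter(words)

# Scale each word's font size linearly between a minimum and maximum size.
min_size, max_size = 10, 48
top_count = frequencies.most_common(1)[0][1]
font_sizes = {
    word: min_size + (max_size - min_size) * count / top_count
    for word, count in frequencies.items()
}
print(frequencies.most_common(3))
```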

Another visualization technique that can be used for semi-structured or unstructured data is the network diagram. Network diagrams represent relationships as nodes (individual actors within the network) and ties (relationships between the individuals). They are used in many applications, for example in the analysis of social networks or in mapping product sales across geographic areas.
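The data structure underneath a network diagram is a set of nodes and ties. Below is a small sketch using a plain adjacency list from the standard library; the names and ties are hypothetical, and a dedicated graph library would typically handle layout and drawing.

```python
from collections import defaultdict

# Hypothetical ties between actors in a small social network.
ties = [("Ana", "Ben"), ("Ana", "Cy"), ("Ben", "Cy"), ("Cy", "Dee")]

# Undirected adjacency list: each node maps to the set of its neighbors.
graph = defaultdict(set)
for a, b in ties:
    graph[a].add(b)
    graph[b].add(a)

# Degree (number of ties) is a simple measure of how connected a node is.
degree = {node: len(neighbors) for node, neighbors in graph.items()}
most_connected = max(degree, key=degree.get)
print(degree, most_connected)
```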

References

https://www.kdnuggets.com/2019/04/best-data-visualization-techniques.html