
Q1. What is the minimum number of variables/features required to perform clustering?

A. 0

B. 1

C. 2

D. 3

Solution: (B)

At least a single variable is required to perform clustering analysis. Clustering analysis with a single variable can be visualized with the help of a histogram.
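
As a rough illustration of the histogram idea, the sketch below bins a single made-up variable (the `ages` values and the bin width of 10 are assumptions, not data from this quiz) and prints a text histogram. Gaps between the bars hint at where cluster boundaries might lie.

```python
from collections import Counter

# Hypothetical single feature; the values are invented for illustration.
ages = [21, 22, 23, 24, 25, 41, 42, 43, 44, 61, 62, 63]

bin_width = 10
# Map each value to the lower edge of its bin and count the members per bin.
counts = Counter((age // bin_width) * bin_width for age in ages)

# Print one bar per bin, including empty bins between the occupied ones.
for start in range(min(counts), max(counts) + bin_width, bin_width):
    print(f"{start:>3}-{start + bin_width - 1:<3} {'#' * counts[start]}")
```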

Q2. For two runs of K-Means clustering, is it expected to get the same clustering results?

A. Yes

B. No

Solution: (B)

The K-Means clustering algorithm converges to a local minimum, which may coincide with the global minimum in some cases but not always. Because the starting centroids are chosen at random, two runs can end in different local minima. Therefore, it’s advised to run the K-Means algorithm multiple times before drawing inferences about the clusters.

However, note that it’s possible to get the same clustering results from K-Means by setting the same seed value for each run. That works simply because the algorithm then draws the same sequence of random numbers in every run.
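
The effect of a fixed seed can be sketched with Python's standard `random` module. The helper `initial_centroids` is a hypothetical name, and picking `k` observations as starting centroids is only one common initialisation scheme, assumed here for illustration.

```python
import random

points = [(2, 2), (4, 4), (6, 6), (0, 4), (4, 0), (5, 5), (9, 9)]

def initial_centroids(points, k, seed):
    """Pick k distinct observations as starting centroids, driven by the seed."""
    return random.Random(seed).sample(points, k)

same_a = initial_centroids(points, 3, seed=42)
same_b = initial_centroids(points, 3, seed=42)

# Identical seed, identical starting centroids, hence identical K-Means runs.
print(same_a == same_b)
```

With a different seed the starting centroids may differ, which is why unseeded runs are not guaranteed to agree.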

Q3. Is it possible that the assignment of observations to clusters does not change between successive iterations in K-Means?

A. Yes

B. No

C. Can’t say

D. None of these

Solution: (A)

When the K-Means algorithm has reached a local or global minimum, it will not alter the assignment of data points to clusters between two successive iterations.

Q4. Which of the following can act as possible termination conditions in K-Means?

1. Run for a fixed number of iterations.

2. Assignment of observations to clusters does not change between iterations.

3. Centroids do not change between successive iterations.

4. Terminate when RSS (residual sum of squares) falls below a threshold.

Options:

A. 1, 3 and 4

B. 1, 2 and 3

C. 1, 2 and 4

D. All of the above

Solution: (D)

All four conditions can be used as possible termination conditions in K-Means clustering:

1. A fixed number of iterations limits the runtime of the clustering algorithm, but in some cases the quality of the clustering will be poor because of an insufficient number of iterations.

2. Stopping when assignments no longer change produces a good clustering, except for cases with a bad local minimum, but runtimes may be unacceptably long.

3. Stopping when centroids no longer change also ensures that the algorithm has converged to a minimum.

4. Terminating when RSS falls below a threshold ensures that the clustering is of a desired quality after termination. In practice, it’s good to combine it with a bound on the number of iterations to guarantee termination.
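
The four stopping rules can be sketched in one loop. This is a minimal pure-Python version of Lloyd's algorithm written for illustration; the function name `kmeans`, its parameters, and the returned reason strings are assumptions, not part of any library.

```python
import math

def kmeans(points, centroids, max_iter=100, rss_threshold=None):
    """Illustrative K-Means that reports which stopping rule ended the run."""
    centroids = [tuple(c) for c in centroids]
    assignment = None
    for iteration in range(1, max_iter + 1):            # rule 1: iteration cap
        # Assign every point to the index of its nearest centroid.
        new_assignment = [
            min(range(len(centroids)), key=lambda j: math.dist(p, centroids[j]))
            for p in points
        ]
        if new_assignment == assignment:                 # rule 2: assignments stable
            return centroids, assignment, "assignments unchanged", iteration
        assignment = new_assignment

        # Recompute each centroid as the mean of its members.
        new_centroids = []
        for j, old in enumerate(centroids):
            members = [p for p, a in zip(points, assignment) if a == j]
            if members:
                new_centroids.append(tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                new_centroids.append(old)                # empty cluster keeps its centroid
        if new_centroids == centroids:                   # rule 3: centroids stable
            return centroids, assignment, "centroids unchanged", iteration
        centroids = new_centroids

        # Residual sum of squares: squared distance of each point to its centroid.
        rss = sum(math.dist(p, centroids[a]) ** 2 for p, a in zip(points, assignment))
        if rss_threshold is not None and rss < rss_threshold:   # rule 4: RSS target
            return centroids, assignment, "rss below threshold", iteration
    return centroids, assignment, "iteration cap reached", max_iter
```

Keeping `max_iter` alongside `rss_threshold` mirrors the advice above: the RSS target alone may never be met, so the cap guarantees termination.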

Q5. Which of the following algorithms is most sensitive to outliers?

A. K-means clustering algorithm

B. K-medians clustering algorithm

C. K-modes clustering algorithm

D. K-medoids clustering algorithm

Solution: (A)

Out of all the options, the K-Means clustering algorithm is the most sensitive to outliers because it uses the mean of the cluster's data points as the cluster center, and a mean is pulled strongly toward extreme values.
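
A small numeric sketch makes the difference visible. The values below are invented; the point is only how far a single outlier moves the mean (the K-Means center) compared with the median (the K-Medians center).

```python
from statistics import mean, median

cluster = [1, 2, 3, 4, 5]          # made-up one-dimensional cluster
with_outlier = cluster + [100]     # the same cluster plus one extreme value

mean_shift = mean(with_outlier) - mean(cluster)        # mean moves from 3 to about 19.17
median_shift = median(with_outlier) - median(cluster)  # median moves from 3 to 3.5

print(mean_shift, median_shift)
```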

Q6. Assume you want to cluster 7 observations into 3 clusters using the K-Means clustering algorithm. After the first iteration, clusters C1, C2 and C3 have the following observations:

C1: {(2,2), (4,4), (6,6)}

C2: {(0,4), (4,0)}

C3: {(5,5), (9,9)}

What will be the cluster centroids when you proceed to the second iteration?

A. C1: (4,4), C2: (2,2), C3: (7,7)

B. C1: (6,6), C2: (4,4), C3: (9,9)

C. C1: (2,2), C2: (0,0), C3: (5,5)

D. None of these

Solution: (A)

Finding centroid for data points in cluster C1 = ((2+4+6)/3, (2+4+6)/3) = (4, 4)

Finding centroid for data points in cluster C2 = ((0+4)/2, (4+0)/2) = (2, 2)

Finding centroid for data points in cluster C3 = ((5+9)/2, (5+9)/2) = (7, 7)

Hence, C1: (4,4), C2: (2,2), C3: (7,7)
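
The same arithmetic can be checked with a few lines of Python. The helper name `centroid` is an assumption introduced for this sketch.

```python
clusters = {
    "C1": [(2, 2), (4, 4), (6, 6)],
    "C2": [(0, 4), (4, 0)],
    "C3": [(5, 5), (9, 9)],
}

def centroid(points):
    """Coordinate-wise mean of a list of points."""
    return tuple(sum(coords) / len(points) for coords in zip(*points))

centroids = {name: centroid(pts) for name, pts in clusters.items()}
print(centroids)  # {'C1': (4.0, 4.0), 'C2': (2.0, 2.0), 'C3': (7.0, 7.0)}
```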

Q7. Feature scaling is an important step before applying the K-Means algorithm. What is the reason behind this?

A. In distance calculation, it gives the same weight to all features

B. You always get the same clusters, whether or not you use feature scaling

C. It is an important step for Manhattan distance but not for Euclidean distance

D. None of these

Solution: (A)

Feature scaling ensures that all the features get the same weight in the clustering analysis. Consider a scenario of clustering people based on their weight (in kg) with range 55-110 and height (in feet) with range 5.6 to 6.4. In this case, the clusters produced without scaling can be very misleading, as the range of weight is much larger than that of height and therefore dominates the distance calculation. It’s necessary to bring both features to the same scale so that they have equal weightage in the clustering result.
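
To see how the unscaled weight dominates, the sketch below compares the per-feature contributions to the squared Euclidean distance between two hypothetical people, before and after min-max scaling. The two sample people and the `min_max` helper are assumptions; the ranges are the ones quoted above.

```python
def min_max(value, low, high):
    """Rescale a value to [0, 1] given the feature's range."""
    return (value - low) / (high - low)

# Two hypothetical people as (weight, height).
a = (60.0, 5.6)
b = (70.0, 6.4)

# Per-feature squared differences on the raw scale: weight dwarfs height.
raw_sq = [(a[0] - b[0]) ** 2, (a[1] - b[1]) ** 2]

# The same differences after scaling each feature by its quoted range.
scaled_sq = [
    (min_max(a[0], 55, 110) - min_max(b[0], 55, 110)) ** 2,
    (min_max(a[1], 5.6, 6.4) - min_max(b[1], 5.6, 6.4)) ** 2,
]

weight_share_raw = raw_sq[0] / sum(raw_sq)
print(weight_share_raw, scaled_sq)
```

On the raw scale, weight accounts for more than 99% of the squared distance even though these two people sit at opposite ends of the height range; after scaling, the height difference is the larger term.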

Q8. Which of the following methods is used for finding the optimal number of clusters in the K-Means algorithm?

A. Elbow method

B. Manhattan method

C. Euclidean method

D. All of the above

E. None of these

Solution: (A)

Out of the given options, only the elbow method is used for finding the optimal number of clusters. The elbow method looks at the percentage of variance explained as a function of the number of clusters: one should choose a number of clusters such that adding another cluster doesn’t give a much better model of the data.
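
One way to locate the elbow numerically is sketched below. The within-cluster sums of squares in `wcss` are invented for illustration, and picking the largest second difference is just one simple heuristic, assumed here; in practice the elbow is often judged by eye from the plot.

```python
# Hypothetical within-cluster sum of squares for k = 1..6 (made-up numbers).
wcss = {1: 1000.0, 2: 600.0, 3: 300.0, 4: 270.0, 5: 250.0, 6: 240.0}

# Fraction of variance explained, taking the k = 1 value as the total.
explained = {k: 1 - value / wcss[1] for k, value in wcss.items()}

def elbow(wcss):
    """Return the interior k where the curve bends most (largest second difference)."""
    ks = sorted(wcss)
    bend = {
        k: wcss[prev] - 2 * wcss[k] + wcss[nxt]
        for prev, k, nxt in zip(ks, ks[1:], ks[2:])
    }
    return max(bend, key=bend.get)

print(elbow(wcss), explained[3])
```

For these numbers, going from 3 to 4 clusters adds little explained variance, so the heuristic selects k = 3.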

Q9. Which of the following can be applied to get good results from the K-Means algorithm, corresponding to the global minimum?

1. Try to run the algorithm for different centroid initializations

2. Adjust the number of iterations

3. Find out the optimal number of clusters

Options:

A. 2 and 3

B. 1 and 3

C. 1 and 2

D. All of the above

Solution: (D)

All of these are standard practices that are used in order to obtain good clustering results.
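
The first practice, trying several centroid initialisations and keeping the best run, can be sketched as follows. The function names `lloyd` and `best_of_restarts`, the default of 10 restarts, and the sample points are assumptions made for this illustration.

```python
import math
import random

def lloyd(points, centroids, max_iter=50):
    """Plain K-Means from the given starting centroids; returns (rss, centroids)."""
    for _ in range(max_iter):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda j: math.dist(p, centroids[j]))
            clusters[nearest].append(p)
        # Mean of each cluster; an empty cluster keeps its previous centroid.
        updated = [
            tuple(sum(c) / len(members) for c in zip(*members)) if members else old
            for members, old in zip(clusters, centroids)
        ]
        if updated == centroids:
            break
        centroids = updated
    rss = sum(min(math.dist(p, c) for c in centroids) ** 2 for p in points)
    return rss, centroids

def best_of_restarts(points, k, n_restarts=10, seed=0):
    """Run K-Means from several random initialisations and keep the lowest RSS."""
    rng = random.Random(seed)
    runs = [lloyd(points, rng.sample(points, k)) for _ in range(n_restarts)]
    return min(runs, key=lambda run: run[0])

points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10), (20, 0), (20, 1), (21, 0)]
best_rss, best_centroids = best_of_restarts(points, k=3)
print(best_rss, best_centroids)
```

More restarts can only keep or lower the best RSS found, at the cost of extra runtime; they do not guarantee reaching the global minimum.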

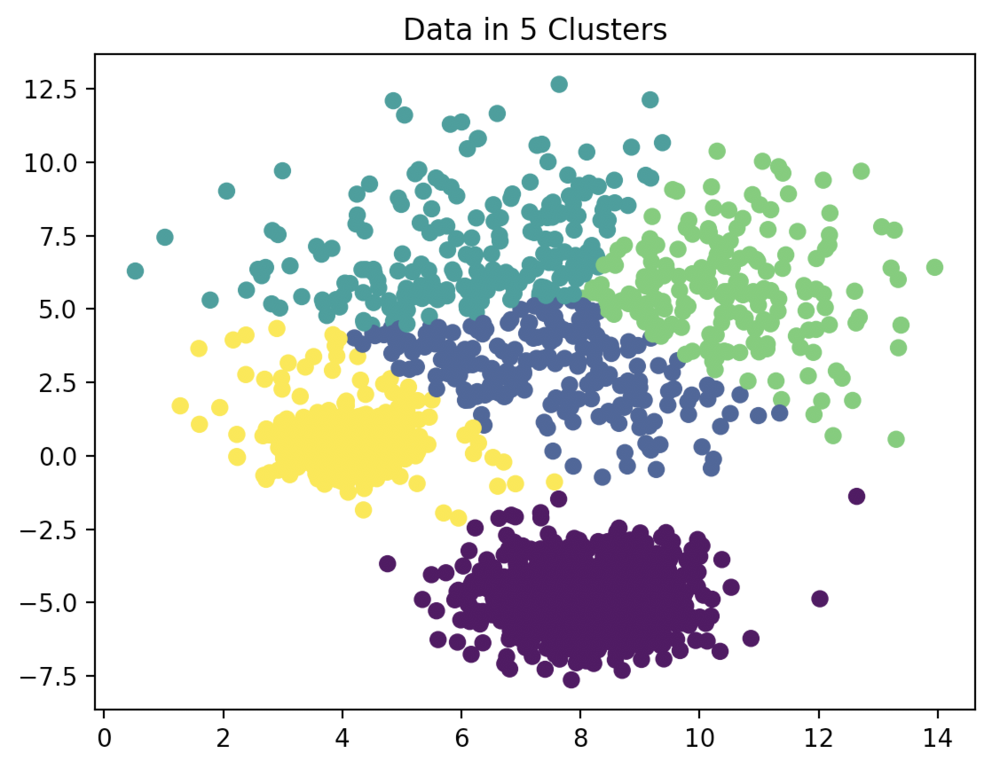

Q10. What is the best choice for the number of clusters based on the results shown above?

A. 5

B. 6

C. 14

D. Greater than 14

Solution: (B)

Based on the above results, the best choice of number of clusters using elbow method is 6.
