Artificial Neural Networks

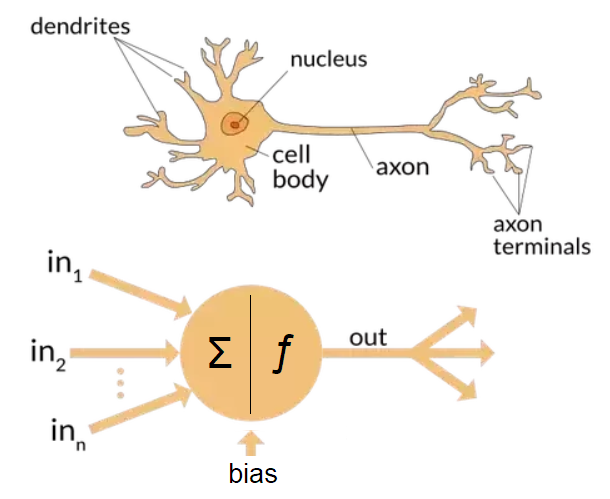

Artificial neural networks (ANNs) are mathematical models loosely based on a highly simplified picture of the networks of neurons in the brain. ANNs learn to recognize and classify patterns and can also predict values.

Perceptron: An Artificial Neuron

The perceptron is an artificial neuron developed by Frank Rosenblatt in 1957. It can be considered the simplest artificial neural network.

Perceptrons had perhaps the most far-reaching impact of any of the early neural nets. Several different types of perceptrons have been used and described by various researchers.

The original perceptrons had three layers of neurons (sensory units, associator units, and a response unit), forming an approximate model of a retina. Under suitable assumptions, the perceptron's iterative learning procedure can be proved to converge to the correct weights, i.e., the weights that allow the net to produce the correct output value for each of the training input patterns.

The architecture of a simple perceptron for performing a single classification is shown in the figure.

The goal of the net is to classify each input pattern as belonging, or not belonging, to a particular class. Belonging is signified by the output unit giving a response of +1; not belonging is indicated by a response of -1.

An output of zero means that the net is undecided.
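
The three possible responses can be illustrated with a minimal sketch. It assumes the output unit computes a weighted sum of its inputs plus a bias and passes it through a sign function. The helper names and the weight and bias values are hypothetical, chosen only for illustration.

```python
def sign(value):
    """Return +1, -1, or 0 depending on the sign of value."""
    return (value > 0) - (value < 0)


def perceptron_output(x, w, b):
    """Response of a single output unit for one input pattern."""
    net = sum(w_i * x_i for w_i, x_i in zip(w, x)) + b  # weighted sum plus bias
    return sign(net)  # +1: belongs, -1: does not belong, 0: undecided


# Hypothetical weights and bias, picked by hand for illustration only.
w = [1.0, 1.0]
b = -1.0

print(perceptron_output([1.0, 1.0], w, b))  # 1   (net = 1.0)
print(perceptron_output([0.0, 0.0], w, b))  # -1  (net = -1.0)
print(perceptron_output([1.0, 0.0], w, b))  # 0   (net = 0.0)
```

The third pattern lands exactly on the decision boundary, which is the undecided case.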

Perceptron as Linear Classifier

Perceptron Learning Rule

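Learning happens by adjusting the weights. The perceptron learning rule is:

$$w_i \leftarrow w_i + \Delta w_i, \qquad \Delta w_i = \eta \, (t - O) \, x_i$$

Here $w_i$ is the weight on the $i$-th input $x_i$, η is the learning rate with 0 < η ≤ 1, t is the target output of the current example, and O is the output obtained by the perceptron.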
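
A short training loop makes the rule concrete. This is a sketch, assuming the rule takes the common form w_i ← w_i + η (t − O) x_i, with the bias treated as a weight on a constant input of 1. The function names, the choice η = 0.5, and the logical AND data set are assumptions for illustration.

```python
def sign(value):
    """Return +1, -1, or 0 depending on the sign of value."""
    return (value > 0) - (value < 0)


def predict(x, w, b):
    """Perceptron output O for one input pattern."""
    return sign(sum(w_i * x_i for w_i, x_i in zip(w, x)) + b)


def train_perceptron(X, targets, eta=0.5, max_epochs=100):
    """Apply w_i <- w_i + eta * (t - O) * x_i until a full pass has no errors."""
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(max_epochs):
        errors = 0
        for x, t in zip(X, targets):
            o = predict(x, w, b)
            if o != t:
                # Adjust each weight in proportion to the error and its input.
                w = [w_i + eta * (t - o) * x_i for w_i, x_i in zip(w, x)]
                b += eta * (t - o)  # bias: weight on a constant input of 1
                errors += 1
        if errors == 0:  # every training pattern is classified correctly
            break
    return w, b


# Logical AND with targets +1 (in the class) and -1 (not in the class).
X = [[0, 0], [0, 1], [1, 0], [1, 1]]
targets = [-1, -1, -1, 1]

w, b = train_perceptron(X, targets)
predictions = [predict(x, w, b) for x in X]
print(predictions)  # [-1, -1, -1, 1]
```

Because AND is linearly separable, the loop stops after a few passes with every pattern classified correctly.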

Limitations of the Simple Perceptron

- Works only for linearly separable data
- Useful only for simple classification scenarios
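
The XOR function is the classic example of data that is not linearly separable. The sketch below runs the same kind of update, assumed here to be w_i ← w_i + η (t − O) x_i, on the four XOR patterns. The helper names, η = 0.5, and the cap of 1000 epochs are assumptions for illustration.

```python
def sign(value):
    """Return +1, -1, or 0 depending on the sign of value."""
    return (value > 0) - (value < 0)


def predict(x, w, b):
    """Perceptron output for one input pattern."""
    return sign(sum(w_i * x_i for w_i, x_i in zip(w, x)) + b)


# XOR: the target is +1 when exactly one input is 1, otherwise -1.
X = [[0, 0], [0, 1], [1, 0], [1, 1]]
targets = [-1, 1, 1, -1]

w, b, eta = [0.0, 0.0], 0.0, 0.5
for epoch in range(1000):
    errors = 0
    for x, t in zip(X, targets):
        o = predict(x, w, b)
        if o != t:
            w = [w_i + eta * (t - o) * x_i for w_i, x_i in zip(w, x)]
            b += eta * (t - o)
            errors += 1
    if errors == 0:  # never happens for XOR: no separating line exists
        break

correct = sum(predict(x, w, b) == t for x, t in zip(X, targets))
print(f"{correct} of {len(X)} patterns correct after {epoch + 1} epochs")
```

No choice of weights classifies all four patterns, so the loop always runs to the epoch cap and at least one pattern stays misclassified.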

Applications of Perceptron

A perceptron can be used only on linearly separable data. Typical applications include:

- Spam filtering
- Web page classification

However, if the data are transformed into another space in which the classes are easier to separate (for example, with kernels), perceptrons can be applied to many more problems.
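
As a small illustration of such a transformation, the sketch below uses an explicit feature map as a simple stand-in for a kernel. Each XOR pattern (x1, x2) is mapped to (x1, x2, x1·x2), which makes the two classes linearly separable. The feature map, helper names, and η = 0.5 are assumptions chosen for this example.

```python
def sign(value):
    """Return +1, -1, or 0 depending on the sign of value."""
    return (value > 0) - (value < 0)


def predict(x, w, b):
    """Perceptron output for one (already transformed) input pattern."""
    return sign(sum(w_i * x_i for w_i, x_i in zip(w, x)) + b)


def transform(x):
    """Hypothetical feature map: append the product of the two inputs."""
    return [x[0], x[1], x[0] * x[1]]


# XOR is not linearly separable in the original two-dimensional space.
X = [[0, 0], [0, 1], [1, 0], [1, 1]]
targets = [-1, 1, 1, -1]
X_mapped = [transform(x) for x in X]

w, b, eta = [0.0, 0.0, 0.0], 0.0, 0.5
for _ in range(1000):
    errors = 0
    for x, t in zip(X_mapped, targets):
        o = predict(x, w, b)
        if o != t:
            w = [w_i + eta * (t - o) * x_i for w_i, x_i in zip(w, x)]
            b += eta * (t - o)
            errors += 1
    if errors == 0:  # all four patterns are now classified correctly
        break

predictions = [predict(x, w, b) for x in X_mapped]
print(predictions)  # [-1, 1, 1, -1]
```

In the three-dimensional space a single plane separates the classes, so the same learning procedure that fails on raw XOR converges here.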

It is well known that neural networks with several layers of artificial neurons (multilayer perceptrons) can be used as universal approximators.
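
The extra power of a second layer can be seen on a tiny case. The sketch below is not a demonstration of universal approximation. It only shows that two layers of threshold units, with hand-picked weights that are assumptions of this example and not learned, can compute XOR, which a single perceptron cannot.

```python
def step(value):
    """Threshold unit: 1 if the net input is positive, otherwise 0."""
    return 1 if value > 0 else 0


def neuron(x, w, b):
    """Single artificial neuron with a step activation."""
    return step(sum(w_i * x_i for w_i, x_i in zip(w, x)) + b)


def xor_network(x1, x2):
    """Two-layer network with hand-chosen (hypothetical) weights."""
    h_or = neuron([x1, x2], [1, 1], -0.5)       # hidden unit 1: x1 OR x2
    h_nand = neuron([x1, x2], [-1, -1], 1.5)    # hidden unit 2: NOT (x1 AND x2)
    return neuron([h_or, h_nand], [1, 1], -1.5)  # output unit: AND of both


for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x1, x2, "->", xor_network(x1, x2))
# 0 0 -> 0
# 0 1 -> 1
# 1 0 -> 1
# 1 1 -> 0
```

Each hidden unit draws one line in the input plane, and the output unit combines the two half-planes into a region that no single line could describe.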

Code Examples

Although implementing a perceptron is relatively straightforward, it is a good idea to use existing libraries for quick exploration. In this section, we go through perceptron examples using the scikit-learn library.

Digit Recognition Example

```python
# Imports
from sklearn.datasets import load_digits
from sklearn.linear_model import Perceptron
from sklearn.metrics import classification_report

# Load the digits dataset
X, y = load_digits(return_X_y=True)

# Train the model
clf = Perceptron(tol=1e-3, random_state=0)
clf.fit(X, y)

# Print the classification score (mean accuracy on the training data)
print("Classification Score : ", clf.score(X, y))

# Per-class precision, recall, and F1, also measured on the training data
predictions = clf.predict(X)
print(classification_report(y, predictions))
```

Iris Classification Using Perceptron

```python
# Load required libraries
from sklearn import datasets
from sklearn.preprocessing import StandardScaler
from sklearn.linear_model import Perceptron
from sklearn.model_selection import train_test_split
from sklearn.metrics import classification_report

# Load the iris dataset
iris = datasets.load_iris()

# Create our X and y data
X = iris.data
y = iris.target

# Split the data into 70% training data and 30% test data
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3)

# Train the scaler on the training data only, so that no information from the
# test set leaks in; it standardizes every feature to mean=0 and unit variance
sc = StandardScaler()
sc.fit(X_train)

# Apply the scaler to both the training data and the test data
X_train_std = sc.transform(X_train)
X_test_std = sc.transform(X_test)

# Create a perceptron object with the parameters: 40 iterations (epochs) over
# the data and a learning rate of 0.1. max_iter replaces the older n_iter
# argument, and tol=None disables early stopping so that all 40 epochs run.
model = Perceptron(max_iter=40, tol=None, eta0=0.1, random_state=0)

# Train the perceptron
model.fit(X_train_std, y_train)

# Apply the trained perceptron to the test data to predict its labels
y_pred = model.predict(X_test_std)
print(classification_report(y_test, y_pred))
```